If you are unsure who is implementing and enforcing the EU AI Act and what the specific time frames are, you might find this post—and our post on the responsibilities of the European Commission (AI Office)—very helpful. The tables below provide you with a comprehensive list of all obligations and tasks that the AI Act places upon Member States.

Crosspost from: The AI Act: responsibilities of the EU Member States by Kai Zenner. We have reformatted the content for web and performed some editing for readability.

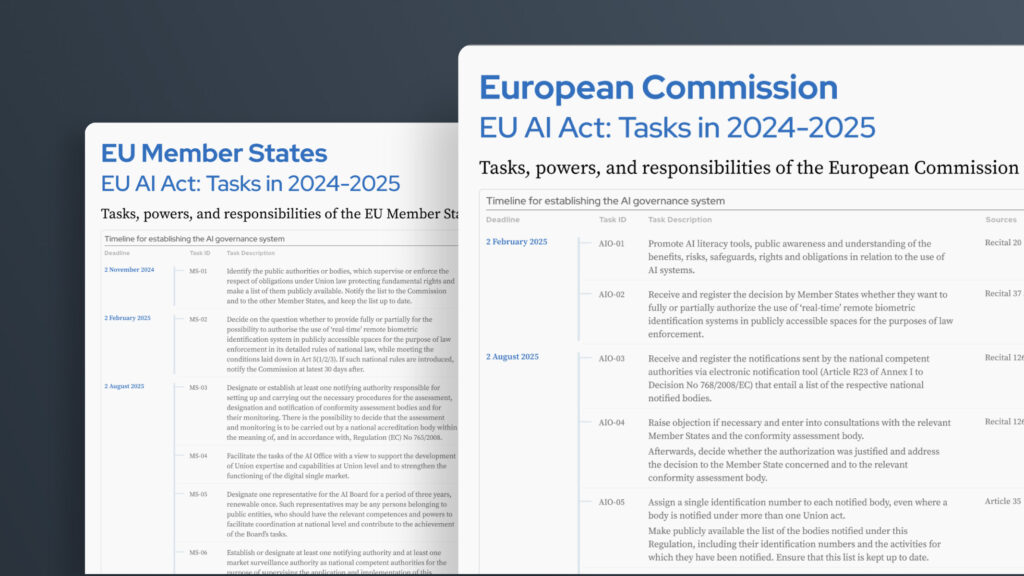

Tasks in 2024-2025

See a timeline of all key tasks required by the EU AI Act in 2024 and 2025, with references to the sources.

Since the technical negotiations on the AI Act were concluded in January 2024, I have heard very different numbers and deadlines for secondary legislation and other implementing and enforcement tasks at EU and national level. No one seems to know what role the AI Office, Member States, and public authorities have to play from now on. So I spent the last two weeks reading the AI Act, looking for the obligations that the law places on Member States and the respective time frames for fulfilling those tasks.

The result: similar to the AI Office, the national level faces many new obligations with sometimes very tight deadlines. In total, I have identified 88 responsibilities for the national level:

- Table A: 18 tasks aimed at establishing an AI governance system, to be executed between 2 November 2024 and 2 August 2026.

- Table B: 7 items of either new national law or secondary legislation that Member States could introduce or where they may support the Commission. Some of those items feature clear deadlines, others are left to the Member States’ discretion.

- Table C: 55 categories of enforcement activities at national level, some of which will need to be executed from 2 February 2025 onwards.

- Table D: 8 tasks aimed at conducting the ex-post evaluation of the AI Act, to be executed between 2025 and at least 2031.

I hope that this list is helpful for civil society, academics, and SMEs that do not have the necessary resources to monitor the implementation and enforcement of the AI Act at EU level. These tables should allow them to identify their key priorities and to focus their activities with regard to monitoring Member States.

Introductory Remarks

1. Transition periods

According to Article 113, the EU AI Act enters into force on 1 August 2024, which is twenty days after its publication in the Official Journal of the European Union on 12 July 2024.

Consequently, the new law becomes applicable on 2 August 2026, which is twenty-four months from the date of the entry into force.

See here for a full implementation timeline which includes all key milestones listed here, and more.

There are, however, three special transition periods for certain categories of articles in the AI Act:

- Six months after the entry into force of the AI Act (2 February 2025): Chapter I (Articles 1–4 [Introduction]) and Chapter II (Article 5 [Prohibitions]) will apply.

- Twelve months after the entry into force of the AI Act (2 August 2025): Chapter III, Section 4 (Articles 28–39 [Notified bodies]), Chapter V (Articles 51–56 [GPAI]), Chapter VII (Articles 64–70 [Governance]), Article 78 [Confidentiality], and Articles 99–100 [Penalties] will apply.

- Thirty-six months after the entry into force of the AI Act (2 August 2027): Article 6(1), Annex I, and the corresponding obligations will apply.
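The transition periods above can be read as a simple lookup from article number to application date. The following is a minimal sketch, assuming the dates and article ranges exactly as quoted in this post; the helper name `applies_from` and the constants are hypothetical and only serve as illustration. The dates are hard-coded as quoted rather than derived by adding months to 1 August 2024.

```python
from datetime import date
from typing import Optional

# Application dates as quoted in the transition-period list above.
GENERAL_APPLICATION = date(2026, 8, 2)  # default: 24 months
ARTICLE_6_1 = date(2027, 8, 2)          # Article 6(1): 36 months

# (first article, last article, application date); ranges are inclusive.
SPECIAL_RANGES = [
    (1, 5, date(2025, 2, 2)),     # Chapters I and II: 6 months
    (28, 39, date(2025, 8, 2)),   # notified bodies: 12 months
    (51, 56, date(2025, 8, 2)),   # GPAI: 12 months
    (64, 70, date(2025, 8, 2)),   # governance: 12 months
    (78, 78, date(2025, 8, 2)),   # confidentiality: 12 months
    (99, 100, date(2025, 8, 2)),  # penalties: 12 months
]


def applies_from(article: int, paragraph: Optional[int] = None) -> date:
    """Return the date from which an article applies (hypothetical helper)."""
    # Only paragraph 1 of Article 6 has the 36-month transition period.
    if article == 6 and paragraph == 1:
        return ARTICLE_6_1
    for first, last, start in SPECIAL_RANGES:
        if first <= article <= last:
            return start
    # Everything not listed falls under the general 24-month rule.
    return GENERAL_APPLICATION


if __name__ == "__main__":
    for art, par in [(5, None), (6, 1), (57, None), (70, None), (99, None)]:
        label = f"Article {art}" + (f"({par})" if par else "")
        print(label, "applies from", applies_from(art, par).isoformat())
```

A lookup like this mirrors the "Timeline" column of the tables below, where each task inherits the application date of the article it is based on unless the article itself sets a more specific deadline.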

2. AI systems or GPAI models that have already been placed on the market or put into service

Article 111 lays down specific rules for AI systems and GPAI models that have already been placed on the market / put into service before the relevant provisions of the AI Act become applicable. It presents three cases:

- AI systems which are components of large-scale IT systems (Annex X) and that have been placed on the market / put into service before 2 August 2027 need to be compliant with the AI Act by 31 December 2030.

- All other high-risk AI systems that have been placed on the market / put into service before 2 August 2026 need to be compliant with the AI Act once they are subject to significant changes in their design. If the provider or deployer of that high-risk AI system is however a public authority, it needs to be compliant with the AI Act by 2 August 2030.

- GPAI models that have been placed on the market / put into service before 2 August 2025 need to be compliant with the AI Act by 2 August 2027.

All time frames in the third column of the tables below assume that the AI system has been placed on the market / put into service after 2 August 2026 or that the GPAI model has been placed on the market / put into service after 2 August 2025.
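The three Article 111 cases described above can be sketched as a small decision function. This is a minimal illustration, assuming the cut-off dates and deadlines exactly as summarised in this post; the names `Kind`, `Outcome` and `article_111_outcome` are hypothetical, and the sketch is not legal advice.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class Kind(Enum):
    LARGE_SCALE_IT = "component of a large-scale IT system (Annex X)"
    HIGH_RISK = "other high-risk AI system"
    GPAI = "GPAI model"


@dataclass(frozen=True)
class Outcome:
    legacy: bool              # True if one of the three cases applies
    deadline: Optional[date]  # fixed compliance date, if the post states one
    note: str


def article_111_outcome(kind: Kind, placed: date,
                        public_authority: bool = False) -> Outcome:
    """Map a system to the transition rule summarised above (hypothetical helper).

    `placed` is the date of placing on the market / putting into service.
    """
    if kind is Kind.LARGE_SCALE_IT and placed < date(2027, 8, 2):
        return Outcome(True, date(2030, 12, 31), "compliant by fixed date")
    if kind is Kind.HIGH_RISK and placed < date(2026, 8, 2):
        if public_authority:
            return Outcome(True, date(2030, 8, 2),
                           "provider or deployer is a public authority")
        return Outcome(True, None,
                       "compliant once subject to significant design changes")
    if kind is Kind.GPAI and placed < date(2025, 8, 2):
        return Outcome(True, date(2027, 8, 2), "compliant by fixed date")
    # None of the three cases applies: the assumption behind the tables below.
    return Outcome(False, None, "regular application dates apply")


if __name__ == "__main__":
    print(article_111_outcome(Kind.GPAI, date(2025, 3, 1)))
    print(article_111_outcome(Kind.HIGH_RISK, date(2026, 1, 15)))
    print(article_111_outcome(Kind.HIGH_RISK, date(2026, 9, 1)))
```

The last branch corresponds to the assumption stated above for the third column of the tables: systems and models placed on the market after the respective cut-off date follow the regular application dates.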

3. The AI governance system on national level

Recital 153 / 154 and Article 70 underline that Member States play a key role in the application and enforcement of the AI Act. Each of them should designate at least one notifying authority and at least one market surveillance authority as national competent authorities. The market surveillance authority, or one of them where several are designated, should act as the single point of contact.

Member States can appoint any kind of public entity (e.g. competition authority, data protection authority, cybersecurity agency) to perform the tasks of the national competent authorities, in accordance with their specific national organizational characteristics and needs. For instance, Germany decided to appoint its Federal Accreditation Body (‘Deutsche Akkreditierungsstelle’) as notifying authority and its Federal Network Agency (‘Bundesnetzagentur’) as market surveillance authority.

The national competent authorities should exercise their powers independently, impartially and without bias, to safeguard the principles of objectivity of their activities and tasks and to ensure the application and implementation of the AI Act. The members of these authorities should refrain from any action incompatible with their duties and should be subject to confidentiality rules.

Article 3 contains the legal definitions relevant to the national implementation and enforcement system. Point (19) defines the ‘notifying authority’ as the national authority responsible for setting up and carrying out the necessary procedures for the assessment, designation and notification of conformity assessment bodies and for their monitoring. Point (26) defines the ‘market surveillance authority’ as the national authority carrying out the activities and taking the measures pursuant to Regulation (EU) 2019/1020. Lastly, point (48) defines a ‘national competent authority’ as a notifying authority or a market surveillance authority.

This document lists the responsibilities of each Member State as well as its national competent authorities, meaning their designated notifying authority and market surveillance authority. It does not specify which of the entities is fulfilling the respective task.

Responsibilities and Time Frames

These tables can also be viewed as an infographic (courtesy of Simone Mohrs).

Table A: Timeline for Establishing the AI Governance System (18 tasks)

| ID | Responsibility | Timeline |

|---|---|---|

| 1 | Recital 37 and Article 5(5): Decide whether to provide, fully or partially, for the possibility to authorise the use of ‘real-time’ remote biometric identification systems in publicly accessible spaces for the purpose of law enforcement in detailed rules of national law, while meeting the conditions laid down in Art 5(1), (2) and (3). If such national rules are introduced, notify the Commission at the latest 30 days after their adoption. | Depending on the respective national decision. Rule of Art 113(a) applies, meaning that the related norms apply from 02 February 2025. |

| 2 | Recital 81 and Article 18(2): Determine conditions under which the documentation remains at the disposal of the national competent authorities for the period indicated in Article 18(1) for the cases when a provider or its authorized representative established on its territory goes bankrupt or ceases its activity prior to the end of that period. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 3 | Article 28(1): Designate or establish at least one notifying authority responsible for setting up and carrying out the necessary procedures for the assessment, designation and notification of conformity assessment bodies and for their monitoring. There is the possibility to decide that the assessment and monitoring is to be carried out by a national accreditation body within the meaning of, and in accordance with, Regulation (EC) No 765/2008. | No concrete time frame to fulfill this task. Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 4 | Recital 123 – 125 and Article 43(1): Ensure that the market surveillance authority referred to in Article 74(8) or (9), as applicable, can act as a notified body for the purposes of the conformity assessment procedure referred to in Annex VII, where the high-risk AI system is intended to be put into service by law enforcement, immigration or asylum authorities or by Union institutions, bodies, offices or agencies. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 5 | Recital 138 and Article 57(1-3): Ensure that the respective national competent authorities establish at least one AI regulatory sandbox at national level to facilitate the development and testing of innovative AI systems under strict regulatory oversight before these systems are placed on the market or otherwise put into service. This obligation can also be fulfilled by participating in an existing regulatory sandbox or by jointly establishing a sandbox with the competent authorities of one or more other Member States, insofar as this participation provides an equivalent level of national coverage for the participating Member States. Sandboxes may be established in physical, digital or hybrid form and may accommodate physical as well as digital products. Inform the AI Office and the Board of the establishment of a regulatory sandbox. If deemed necessary, ask them for support and guidance. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 6 | Recital 138 and Article 57(4): Ensure that the competent authorities for the regulatory sandbox receive sufficient resources to comply with Article 57 effectively and in a timely manner. Ensure an appropriate level of cooperation between the authorities supervising other sandboxes and the national competent authorities. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 7 | Recital 142: Promote research and development of AI solutions in support of socially and environmentally beneficial outcomes, such as AI-based solutions to increase accessibility for persons with disabilities, tackle socio-economic inequalities, or meet environmental targets, by allocating sufficient resources, including public and Union funding, and, where appropriate and provided that the eligibility and selection criteria are fulfilled, considering in particular projects which pursue such objectives. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 8 | Recital 148 and Article 64(2): Facilitate the tasks of the AI Office with a view to support the development of Union expertise and capabilities at Union level and to strengthen the functioning of the digital single market. | No concrete time frame to fulfill this task. Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 9 | Recital 149 and Article 65(3): Designate one representative for the AI Board for a period of three years, renewable once. Such representatives may be any persons belonging to public entities, who should have the relevant competences and powers to facilitate coordination at national level and contribute to the achievement of the Board’s tasks. | No concrete time frame to fulfill this task. Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 10 | Recital 153 / 154 and Article 70(1-5): Establish or designate at least one notifying authority and at least one market surveillance authority as national competent authorities for the purpose of supervising the application and implementation of this Regulation. Designate the or one of the market surveillance authorities to act as the single point of contact for the AI Act. Ensure that the national competent authorities are provided with adequate technical, financial and human resources, and with infrastructure to fulfil their tasks effectively under this Regulation. Besides, ensure an adequate level of cybersecurity. Communicate to the Commission the identity of the notifying authorities and the market surveillance authorities and the tasks of those authorities, as well as any subsequent changes thereto. Notify the Commission also of the single point of contact for the AI Act. | No concrete time frame to fulfill this task. Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 11 | Recital 153 / 154 and Article 70(2): Make publicly available information on how competent authorities and single points of contact can be contacted, through electronic communication means. | To be completed by 02 August 2025. |

| 12 | Recital 131 and Article 71(1): Support the Commission in setting up and maintaining the EU database. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 13 | Recital 158: Designate the competent authorities for the supervision and enforcement of the financial services legal acts, in particular competent authorities as defined in Regulation (EU) No 575/2013 and Directives 2008/48/EC, 2009/138/EC, 2013/36/EU, 2014/17/EU and (EU) 2016/97, within their respective competences, as competent authorities for the purpose of supervising the implementation of the AI Act, including for market surveillance activities, as regards AI systems provided or used by regulated and supervised financial institutions, unless it is decided to designate another authority to fulfil these market surveillance tasks. Provide them with all the powers under the AI Act and Regulation (EU) 2019/1020 to enforce the requirements and obligations of the AI Act, including powers to carry out ex-post market surveillance activities that can be integrated, as appropriate, into their existing supervisory mechanisms and procedures under the relevant Union financial services law. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 14 | Recital 156 and Article 74(8): Designate as market surveillance authorities for the purposes of the AI Act either the competent data protection supervisory authorities under Regulation (EU) 2016/679 or Directive (EU) 2016/680, or any other authority designated pursuant to the same conditions laid down in Articles 41 to 44 of Directive (EU) 2016/680 for high-risk AI systems listed in point 1, 6, 7, 8 of Annex III. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 15 | Recital 156 and Article 74(10): Facilitate coordination between market surveillance authorities designated under the AI Act and other relevant national authorities or bodies, which supervise the application of Union harmonization legislation listed in Annex I, or in other Union law, that might be relevant for the high-risk AI systems referred to in Annex III. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 16 | Recital 157 and Article 77(2): Identify the public authorities or bodies that supervise or enforce the respect of obligations under Union law protecting fundamental rights and make a list of them publicly available. Notify the list to the Commission and to the other Member States, and keep the list up to date. | To be completed by 02 November 2024. |

| 17 | Recital 170 and Article 85: Create a mechanism so that any natural or legal person that has grounds to consider that there has been an infringement of the AI Act is entitled to lodge a complaint to the relevant market surveillance authority. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 18 | Recital 168 / 179 and Article 99, 113: Lay down the rules on penalties and other enforcement measures, which may also include warnings and non-monetary measures, applicable to infringements of the AI Act by operators. Notify the Commission of the rules on penalties, including administrative fines, and of any subsequent amendment to them. | To be completed by 02 August 2025. |

Table B: Timeline for National Law and Secondary Legislation (7 items)

| ID | Responsibility | Timeline |

|---|---|---|

| 1 | Recital 23 and Article 2(11): Maintain or introduce laws, regulations or administrative provisions, which are more favourable to workers in terms of protecting their rights in respect of the use of AI systems by employers, or encouraging or allowing the application of collective agreements, which are more favourable to workers. | Only if deemed necessary. |

| 2 | Recital 20 and Article 4: Facilitate, in cooperation with the relevant stakeholders and the Commission, the drawing up of voluntary codes of conduct to advance AI literacy among persons dealing with the development, operation and use of AI. | No concrete time frame to fulfill this task. Rule of Art 113(a) applies, meaning that the related norms apply from 02 February 2025. |

| 3 | Recital 95 and Article 26(10): Introduce, in accordance with Union law, more restrictive laws on the use of post-remote biometric identification systems. | Only if deemed necessary. |

| 4 | Recital 116 and Article 56(3): Cooperate with the AI Office when it encourages and facilitates the drawing up, review and adaptation of codes of practice. | No concrete time frame to fulfill this task. Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 5 | Recital 165 and Article 95: Encourage and facilitate together with the Commission the drawing up of codes of conduct, including related governance mechanisms, intended to foster the voluntary application to AI systems, other than high-risk AI systems, of some or all of the requirements set out in Chapter III, Section 2 taking into account the available technical solutions and industry best practices allowing for the application of such requirements. | No concrete time frame to fulfill this task. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 6 | Article 96(2): Request that the Commission update its previously adopted guidelines. | Only if deemed necessary. |

| 7 | Recital 173 and Article 97(4): Participate in a consultation with the Commission before it adopts delegated acts. | Once the Commission decides to draft a delegated act. |

Table C: Enforcement Activities (55 categories)

| ID | Responsibility | Timeline |

|---|---|---|

| 1 | Recital 36 and Article 5(4): Receive and register each notification about the use of a ‘real-time’ remote biometric identification system in publicly accessible spaces for law enforcement purposes at national level. | Rule of Art 113(a) applies, meaning that the related norms apply from 02 February 2025. |

| 2 | Recital 53 and Article 6(3-8): Request and receive the documentation and assessment from a provider, who considers that his or her AI system is not high-risk based on the conditions referred to in Article 6(3). | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 3 | Recital 81 and Article 20(2): Receive and register a notification from a provider that becomes aware that the high-risk AI system presents a risk within the meaning of Article 79(1). | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 4 | Recital 82 and Article 22: Request and register a copy of the mandate from the authorised representative. Receive as well notifications from the authorised representative that the mandate was terminated because he or she considered or had reason to consider that the provider was acting contrary to its obligations pursuant to the AI Act. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 5 | Recital 83 and Article 23: Receive notifications from the importer, who has sufficient reason to consider that a high-risk AI system is not in conformity with the AI Act, is falsified, or accompanied by falsified documentation and where the high-risk AI system presents a risk within the meaning of Article 79(1). | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 6 | Recital 91 – 95 and Article 26(5): Receive and register a notification from the deployer, where he or she has the reason to consider that the use of the high-risk AI system in accordance with the instructions may result in a situation, in which the AI system presents a risk within the meaning of Article 79(1) or where the deployer has identified a serious incident. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 7 | Recital 91 – 95 and Article 26(10): Request and register a notification in the relevant police file from the deployer about uses of a high-risk AI system for post-remote biometric identification. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 8 | Recital 96 and Article 27(3): Receive and register notification from deployers with regard to their fundamental rights impact assessment. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 9 | Recital 126 and Article 29–31: Receive and assess an application for notification from a conformity assessment body. Only notify those conformity assessment bodies that are meeting the requirements of Article 31. Provide the required documentation and inform the Commission and the other Member States, using the electronic notification tool if it was decided to notify the conformity assessment body. Other Member States can object to the notification procedure according to Article 30(4/5). | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 10 | Recital 126 and Article 33(4): Receive and assess the relevant documents concerning the assessment of the qualifications of the subcontractor or the subsidiary and the work carried out by them under the AI Act. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 11 | Recital 126 and Article 34(3): Receive and assess the relevant documentation, including the providers’ documentation, to allow conducting an assessment, designation, notification and monitoring activities, and to facilitate the assessment outlined in Art 29-39. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 12 | Recital 126 and Article 36: Notify the Commission and the other Member States of any relevant changes to the notification of a notified body via the electronic notification tool referred to in Article 30(2). Withdraw the designation where the notified body has ceased its activity or investigate where there is sufficient reason to consider that the notified body no longer meets the requirements laid down in Article 31, or that it is failing to fulfil its obligations. Where it is concluded that the notified body no longer meets the requirements laid down in Article 31 or that it is failing to fulfil its obligations, the designation should be restricted, suspended or withdrawn as appropriate, depending on the seriousness of the failure to meet the requirements or fulfil the obligations. In that case, assess the impact on issued certificates and submit a report to the Commission and other Member States. Require the suspension of certificates and inform the Commission and other Member States, providing documentation. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 13 | Recital 126 and Article 37: Provide the Commission, on request, with all relevant information relating to the notification or the maintenance of the competence of the notified body concerned. Receive and assess the findings of the Commission on the notified body that does not meet the requirements for notification. Take the necessary corrective measures, including the suspension or withdrawal of the notification if necessary. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 14 | Recital 126 and Article 38: Ensure that the bodies that have been notified, participate in the work of the group referred to in Article 38(1), directly or through designated representatives. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 15 | Recital 121 and Article 41(6): Inform the Commission with a detailed explanation if it was assessed that the common specification does not meet the requirements of section 2 and 3 of the high-risk chapter. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 16 | Article 45(1): Request and assess the information provided by notified bodies based on Article 45(1). | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 17 | Recital 130 and Article 46: Authorize the placing on the market or the putting into service of AI systems, which have not undergone a conformity assessment, but only if exceptional reasons apply (e.g. public security or protection of life and health of natural persons, environmental protection and the protection of key industrial and infrastructural assets). Inform the Commission and the other Member States of any authorization that has been issued. Withdraw the derogation if the Commission considers the authorization unjustified. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 18 | Article 47(1): Receive and register the copy of the EU declaration of conformity submitted by providers. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 19 | Recital 101 and Article 53(1a): Request the technical documentation from the provider of a GPAI model. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 20 | Recital 115 and Article 55(1c): Receive the notification from the provider of a systemic GPAI model if the development or use of the model causes a serious incident, including information on the incident and on possible corrective measures. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 21 | Recital 137 / 138 and Article 57(6): Provide, as appropriate, guidance, supervision and support within the AI regulatory sandbox with a view to identifying risks, in particular to fundamental rights, health and safety, testing, mitigation measures, and their effectiveness in relation to the obligations and requirements of this Regulation and, where relevant, other Union and national law supervised within the sandbox. Provide guidance on regulatory expectations and how to fulfil the requirements and obligations set out in the AI Act to providers and prospective providers that are participating in the AI regulatory sandbox. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 22 | Recital 137 / 138 and Article 57(7): Provide to the provider a written proof of the activities successfully carried out in the sandbox. Provide also exit reports (detailing the activities carried out in the sandbox and the related results and learning outcomes). If both the provider or prospective provider and the national competent authority explicitly agree, the exit report may be made publicly available through the single information platform referred to in Article 57. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 23 | Recital 137 / 138 and Article 57(10): Ensure that, to the extent the innovative AI systems involve the processing of personal data or otherwise fall under the supervisory remit of other national authorities or competent authorities providing or supporting access to data, the national data protection authorities and those other national or competent authorities are associated with the operation of the AI regulatory sandbox and involved in the supervision of those aspects to the extent of their respective tasks and powers. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 24 | Recital 137 / 138 and Article 57(11): React on significant risks identified during the development and testing of AI systems by requesting adequate mitigation and, failing that, initiate the suspension of the development and testing process, temporarily or permanently. Inform the AI Office of such decision. Exercise the supervisory powers within the limits of the relevant law, using the discretionary powers when implementing legal provisions in respect of a specific AI regulatory sandbox project, with the objective of supporting innovation in AI in the Union. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 25 | Recital 137 / 138 and Article 57(14): Coordinate the activities and cooperate within the framework of the Board with other Member States. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 26 | Recital 139 and Article 58(4): Specifically agree the terms and conditions of real-world testing and, in particular, the appropriate safeguards with the participants, with a view to protecting fundamental rights, health and safety, before authorising it under supervised conditions within the framework of an AI regulatory sandbox. Cooperate with other national competent authorities with a view to ensuring consistent practices across the Union. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 27 | Recital 140 and Article 59: Assess the safeguards and cooperate with providers and prospective providers in the AI regulatory sandbox that want to use personal data, including by issuing guidance and monitoring the mitigation of any identified significant risks to safety, health, and fundamental rights that may arise during the development, testing and experimentation in that sandbox. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 28 | Recital 141 and Article 60(4): Require providers and prospective providers to provide information, including their real-world testing plans, before the activities by the provider are conducted. If adequate, approve the testing in real world conditions and the real-world testing plan. Decide on extending the testing in real world conditions after six months for a maximum additional period of six months, subject to prior notification by the provider or prospective provider, accompanied by an explanation of the need for such an extension. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 29 | Recital 141 and Article 60(6): Carry out unannounced remote or on-site inspections, and perform checks on the conduct of the testing in real world conditions and the related high-risk AI systems. Use those powers to ensure the safe development of testing in real world conditions. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 30 | Recital 141 and Article 60(7/8): Receive and assess notifications of serious incidents identified in the course of the testing in real world conditions by the provider. Receive and assess the notification of the suspension or termination, and of the final outcome, of the testing in real world conditions by providers or prospective providers. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 31 | Recital 143 and Article 62(1a): Provide SMEs, including start-ups, that have a registered office or a branch in the Union, with priority access to the AI regulatory sandboxes provided that they fulfil the eligibility conditions and selection criteria, without precluding other providers and prospective providers from accessing the sandboxes provided the same conditions and criteria are fulfilled. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 32 | Recital 143 and Article 62(1b/c): Organise specific awareness raising and training activities on the application of the AI Act tailored to the needs of SMEs including start-ups, deployers and, as appropriate, local public authorities. Utilise existing channels and where appropriate, establish new dedicated channels for communication with SMEs, including start-ups, deployers, other innovators and, as appropriate, local public authorities, to support SMEs throughout their development path by providing guidance and responding to queries about the implementation of this Regulation. Where appropriate, these channels should work together to create synergies and ensure homogeneity in their guidance to SMEs, including start-ups, and deployers. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 33 | Recital 143 and Article 62(1d): Facilitate the participation of SMEs and other relevant stakeholders in the standardisation development processes. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 34 | Recital 149 and Article 66(o): Send opinions to the AI Board on qualified alerts regarding GPAI models, and on national experiences and practices on the monitoring and enforcement of AI systems, in particular systems integrating the general-purpose AI models. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 35 | Recital 151 and Article 68 / 69: Request support from the pool of experts constituting the scientific panel for enforcement activities. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 36 | Recital 153 / 154 and Article 70(5): Act in accordance with the confidentiality obligations set out in Article 78 when performing their tasks. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 37 | Recital 153 / 154 and Article 70(8): Provide guidance and advice on the implementation of the AI Act, in particular to SMEs including start-ups, taking into account the guidance and advice of the AI Board and the Commission, as appropriate. Whenever guidance and advice with regard to an AI system in areas covered by other Union law is provided, the national competent authorities under that Union law shall be consulted, as appropriate. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 38 | Recital 155 and Article 73: Receive the reports on serious incidents from providers of high-risk AI systems. Inform the national public authorities or bodies referred to in Article 77(1), when receiving a notification related to a serious incident referred to in Article 3, point (49)(c). Take appropriate measures, as provided for in Article 19 of Regulation (EU) 2019/1020, within seven days from the date of receiving the notification referred to in Article 73(1) and follow the notification procedures as provided in that Regulation. Immediately notify the Commission of any serious incident, whether or not they have taken action on it, in accordance with Article 20 of Regulation (EU) 2019/1020. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 39 | Recital 156 / 160 and Article 74(11-14): Propose joint activities, including joint investigations, to be conducted by market surveillance authorities or market surveillance authorities jointly with the Commission, that have the aim of promoting compliance, identifying non-compliance, raising awareness and providing guidance in relation to this Regulation with respect to specific categories of high-risk AI systems that are found to present a serious risk across two or more Member States. Joint activities to promote compliance should be carried out in accordance with Article 9 of Regulation (EU) 2019/1020. If necessary, request that providers grant full access to the documentation as well as the training, validation and testing data sets used for the development of high-risk AI systems, including, where appropriate and subject to security safeguards, through application programming interfaces (API) or other relevant technical means and tools enabling remote access. Request access to the source code of the high-risk AI system if access to the source code is necessary to assess the conformity of a high-risk AI system with the requirements set out in Chapter III, Section 2, and testing or auditing procedures and verifications based on the data and documentation provided by the provider have been exhausted or proved insufficient. Treat any information or documentation obtained in accordance with the confidentiality obligations set out in Article 78. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 40 | Recital 161 and Article 75(2/3): Cooperate with the AI Office to carry out evaluations of compliance and inform the Board and other market surveillance authorities accordingly in case there is a GPAI system that can be used directly by deployers for at least one purpose that is classified as high-risk and there are sufficient reasons to consider that it is non-compliant. Request assistance from the AI Office where the national level is unable to conclude an investigation on a high-risk AI system because of its inability to access certain information related to the GPAI model on which the high-risk AI system is built. In such cases, the procedure regarding mutual assistance in cross-border cases in Chapter VI of Regulation (EU) 2019/1020 should apply mutatis mutandis. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 41 | Article 76: Ensure that testing in real world conditions is in accordance with the AI Act. Verify that the testing in real world conditions is conducted in compliance with Article 60. Option to allow the testing in real world conditions to be conducted by the provider or prospective provider, in derogation from the conditions set out in Article 60(4), points (f) and (g). Suspend or terminate the testing or require modifications if informed of a serious incident or if there are other grounds for considering that the conditions set out in Articles 60 and 61 are not met. Indicate the grounds for a decision or objection and how the provider or prospective provider can challenge the decision or objection. Communicate the grounds therefor to the market surveillance authorities of other Member States in which the AI system has been tested in accordance with the testing plan. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 42 | Recital 157 and Article 77: Request and access any documentation created or maintained under the AI Act in accessible language and format when access to that documentation is necessary for effectively fulfilling the mandates within the limits of their jurisdiction. The relevant public authority or body shall inform the market surveillance authority of the Member State concerned of any such request. Organise testing of the high-risk AI system through technical means, where the documentation referred to in Article 77(1) is insufficient to ascertain whether an infringement of obligations under Union law protecting fundamental rights has occurred. Organise the testing with the close involvement of the requesting public authority or body within a reasonable time following the request. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 43 | Recital 167 and Article 78(3): Exchange, where necessary and in accordance with relevant provisions of international and trade agreements, confidential information with regulatory authorities of third countries with which the Member State has concluded bilateral or multilateral confidentiality arrangements guaranteeing an adequate level of confidentiality. Ensure that the market surveillance authorities referred to in Article 74(8) and (9), as applicable, can, upon request, immediately access the documentation or obtain a copy thereof. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

| 44 | Article 79: Carry out an evaluation of the AI system concerned in respect of its compliance with all the requirements and obligations laid down in the AI Act, where there is sufficient reason to consider an AI system to present a risk as referred to in Article 79(1). Particular attention shall be given to AI systems presenting a risk to vulnerable groups. Inform and fully cooperate with the relevant national public authorities or bodies referred to in Article 77(1), where risks to fundamental rights are identified. Require the relevant operator to take all appropriate corrective actions to bring the AI system into compliance, to withdraw the AI system from the market, or to recall it within a prescribed period, where, in the course of that evaluation, the market surveillance authority or, where applicable, the market surveillance authority in cooperation with the national public authority referred to in Article 77(1), finds that the AI system does not comply with the requirements and obligations laid down in this Regulation. Inform the relevant notified body accordingly. Inform the Commission and the other Member States of the results of the evaluation and of the actions which it has required the operator to take, where the market surveillance authority considers that the non-compliance is not restricted to its national territory. Take all appropriate provisional measures to prohibit or restrict the AI system’s being made available on its national market or put into service, to withdraw the product or the standalone AI system from that market or to recall it, where the operator of an AI system does not take adequate corrective action. Notify the Commission and the other Member States of those measures and describe the background as indicated in Article 79(6). Inform the Commission and the other Member States of any measures adopted and of any additional information at their disposal relating to the non-compliance of the AI system concerned, and, in the event of disagreement with the notified national measure, of their objections. Ensure that appropriate restrictive measures are taken in respect of the product or the AI system concerned, such as withdrawal of the product or the AI system from their market, without undue delay. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 45 | Recital 158: Report, without delay, to the European Central Bank any information identified in the course of the market surveillance activities that may be of potential interest for the European Central Bank’s prudential supervisory tasks as specified in Council Regulation (EU) No 1024/2013. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 46 | Recital 159: Use effective investigative and corrective powers, including at least the power to obtain access to all personal data that are being processed and to all information necessary for the performance of its tasks, with regard to high-risk AI systems in the area of biometrics, as listed in an annex to the AI Act insofar as those systems are used for the purposes of law enforcement, migration, asylum and border control management, or the administration of justice and democratic processes. Exercise the powers by acting with complete independence. Any limitations of their access to sensitive operational data under this Regulation should be without prejudice to the powers conferred to them by Directive (EU) 2016/680. No exclusion on disclosing data to national data protection authorities under this Regulation should affect the current or future powers of those authorities beyond the scope of this Regulation. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 47 | Article 80: Carry out an evaluation of the AI system concerned in respect of its classification as a high-risk AI system based on the conditions set out in Article 6(3) and the Commission guidelines, where there is sufficient reason to consider that an AI system classified by the provider as non-high-risk pursuant to Article 6(3) is in fact high-risk. Require the relevant provider to take all necessary actions to bring the AI system into compliance with the requirements and obligations laid down in the AI Act, as well as take appropriate corrective action, where, in the course of that evaluation, it is found that the AI system concerned is high-risk. Inform the Commission and the other Member States without undue delay of the results of the evaluation and of the actions which it has required the provider to take, where the market surveillance authority considers that the use of the AI system concerned is not restricted to its national territory. Ensure that all necessary action is taken to bring the AI system into compliance with the requirements and obligations laid down in the AI Act. Where the provider of an AI system concerned does not bring the AI system into compliance with those requirements and obligations within the period referred to in Article 80(2), the provider shall be subject to fines in accordance with Article 99. Where, in the course of the evaluation pursuant to Article 80(1), it is established that the AI system was misclassified by the provider as non-high-risk in order to circumvent the application of requirements in Chapter III, Section 2, the provider shall be subject to fines in accordance with Article 99. Perform appropriate checks, taking into account in particular information stored in the EU database referred to in Article 71, when exercising the power to monitor the application of Article 80, and in accordance with Article 11 of Regulation (EU) 2019/1020. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 48 | Article 81(1/2): Raise an objection, within three months of receipt of the notification referred to in Article 79(5), or within 30 days in the case of non-compliance with the prohibition of the AI practices referred to in Article 5, to a measure taken by another market surveillance authority. Ensure that appropriate restrictive measures are taken in respect of the AI system concerned, such as requiring the withdrawal of the AI system from the market without undue delay, and inform the Commission accordingly, where the Commission considers the measure taken by the relevant Member State to be justified and the objection to be not justified. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 49 | Article 81(2/2): Enter into consultation with the Commission after having received an objection by other Member States. Withdraw the measure and inform the Commission accordingly, where the Commission considers the national measure to be unjustified. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 50 | Article 82: Require the relevant operator to take all appropriate measures to ensure that the AI system concerned, when placed on the market or put into service, no longer presents that risk without undue delay, where, having performed an evaluation under Article 79, after consulting the relevant national public authority referred to in Article 77(1), it is found that although a high-risk AI system complies with the AI Act, it nevertheless presents a risk to the health or safety of persons, to fundamental rights, or to other aspects of public interest protection. Inform the Commission and the other Member States of a finding under Article 82(1). That information shall include all available details, in particular the data necessary for the identification of the AI system concerned, the origin and the supply chain of the AI system, the nature of the risk involved and the nature and duration of the national measures taken. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 51 | Article 83: Require the relevant provider to put an end to the non-compliance concerned, if it is assessed that: (a) the CE marking has been affixed in violation of Article 48; (b) the CE marking has not been affixed; (c) the EU declaration of conformity referred to in Article 47 has not been drawn up; (d) the EU declaration of conformity referred to in Article 47 has not been drawn up correctly; (e) the registration in the EU database referred to in Article 71 has not been carried out; (f) where applicable, no authorised representative has been appointed; (g) technical documentation is not available. Take appropriate and proportionate measures to restrict or prohibit the high-risk AI system being made available on the market or to ensure that it is recalled or withdrawn from the market without delay, where the non-compliance referred to in Article 83(1) persists. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 52 | Article 84: Request support from the Union AI testing support structures, which provide independent technical or scientific advice. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 53 | Article 85: Receive complaints from any natural or legal person that has grounds to consider that there has been an infringement of the provisions of the AI Act. Take the complaints into account for the purpose of conducting market surveillance activities and handle them in line with the dedicated procedures established therefor. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 54 | Recital 162 and Article 88: Request the AI Office to exercise its enforcement powers against providers of GPAI models, where that is necessary and proportionate to assist with the fulfilment of their tasks under the AI Act. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 55 | Recital 168 and Article 99 / 100: Take all measures necessary to ensure that fines are properly and effectively implemented, taking into account the guidelines issued by the Commission pursuant to Article 96. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. |

Table D: Ex-Post Evaluation (8 tasks)

| ID | Responsibility | Timeline |

|---|---|---|

| 1 | Recital 36 and Article 5(4/6): Submit to the Commission an annual report on the use of real-time biometric identification systems. | Rule of Art 113(a) applies, meaning that the related norms apply from 02 February 2025. Consequently, the first annual report by Member States should be published on 02 February 2026. |

| 2 | Recital 91 – 95 and Article 26(10): Receive and assess the annual reports from deployers on their use of post-remote biometric identification systems, excluding the disclosure of sensitive operational data related to law enforcement. | No concrete deliverables. General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. |

| 3 | Recital 138 and Article 57(16): Submit annual reports to the AI Office and to the AI Board as well as a final report. Those reports shall provide information on the progress and results of the implementation of regulatory sandboxes, including best practices, incidents, lessons learnt and recommendations on their setup and, where relevant, on the application and possible revision of the AI Act, including its delegated and implementing acts, and on the application of other Union law supervised by the competent authorities within the sandbox. Make those annual reports or abstracts thereof available to the public. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. Consequently, the first report has to be finalized on 02 August 2027 and every year thereafter until the regulatory sandbox is terminated. |

| 4 | Recital 153 / 154 and Article 70(3): Assess and, if necessary, update the national competent authorities’ competences and resource requirements referred to in Article 70(3) on an annual basis. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. Consequently, the first annual assessment by Member States should be done by 02 August 2026. |

| 5 | Recital 153 / 154 and Article 70(6): Report to the Commission on the status of the financial and human resources of the national competent authorities, with an assessment of their adequacy. | To be done by 02 August 2025 and once every two years thereafter. |

| 6 | Recital 156 and Article 74(2): Report annually to the Commission and relevant national competition authorities any information identified in the course of market surveillance activities that may be of potential interest for the application of Union law on competition rules. Also inform the Commission about the use of prohibited practices that occurred during that year and about the measures taken. | General rule of Art 113 applies, meaning that the related norms apply from 02 August 2026. Consequently, the first report has to be finalized on 02 August 2027 and every year thereafter. |

| 7 | Recital 168 and Article 99(11): Report to the Commission about the administrative fines that have been issued during the year, in accordance with Article 99, and about any related litigation or judicial proceedings. | Rule of Art 113(b) applies, meaning that the related norms apply from 02 August 2025. Consequently, the first annual report by Member States should be concluded by 02 August 2026. |

| 8 | Recital 174 and Article 112(8): Provide the Commission with information upon its request and without undue delay for the evaluation tasks in Article 112. | Only if requested by the Commission. |
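
The timeline column in Table D follows a simple pattern: take the date from which the relevant norms apply and add one year to get the first annual report, or repeat a fixed interval for recurring reports. The sketch below reproduces that arithmetic for readers who want to track the dates themselves. The names `APPLICATION_DATES`, `first_annual_deadline` and `recurring_deadlines` are hypothetical helpers invented for this illustration; they are not part of the AI Act or of any official tool, and the dates are simply those stated in the tables above.

```python
from datetime import date

# Application dates as stated in the timeline column of the tables.
# The dictionary keys are illustrative labels, not official terms.
APPLICATION_DATES = {
    "113(a)": date(2025, 2, 2),   # Rule of Art 113(a)
    "113(b)": date(2025, 8, 2),   # Rule of Art 113(b)
    "general": date(2026, 8, 2),  # General rule of Art 113
}


def first_annual_deadline(rule: str) -> date:
    """Return the application date of the given rule plus one year."""
    start = APPLICATION_DATES[rule]
    return start.replace(year=start.year + 1)


def recurring_deadlines(first: date, every_years: int, count: int) -> list[date]:
    """Return `count` deadlines starting at `first`, spaced `every_years` apart."""
    return [first.replace(year=first.year + i * every_years) for i in range(count)]


if __name__ == "__main__":
    # Table D, row 1: first annual report on real-time biometric identification.
    print(first_annual_deadline("113(a)"))
    # Table D, row 5: resource report due 02 August 2025, then every two years.
    print(recurring_deadlines(date(2025, 8, 2), every_years=2, count=3))
```

Under these assumptions, row 1 yields 2 February 2026 and row 5 yields 2 August 2025, 2027 and 2029, matching the table. The helper only adds whole years, so it would need adjusting for any deadline the Act expresses in months or days.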

If you found this post useful, you may also wish to see our post on the responsibilities of the European Commission (AI Office).

Corrections: Please let us know if you find any mistakes. Due to the complexity of this project some details may have been overlooked. This post will be updated according to new information and user feedback.