
EU AI Act Compliance Checker

The EU AI Act introduces new obligations for entities located within the EU and elsewhere. Use our interactive tool to determine whether or not your AI system will be subject to them.

If you would like to stay updated about your obligations under the EU AI Act, we recommend subscribing to the EU AI Act Newsletter.

For further clarity, we recommend that you seek professional legal advice and follow national guidance. More information about EU AI Act enforcement in your country will likely be provided in 2024.

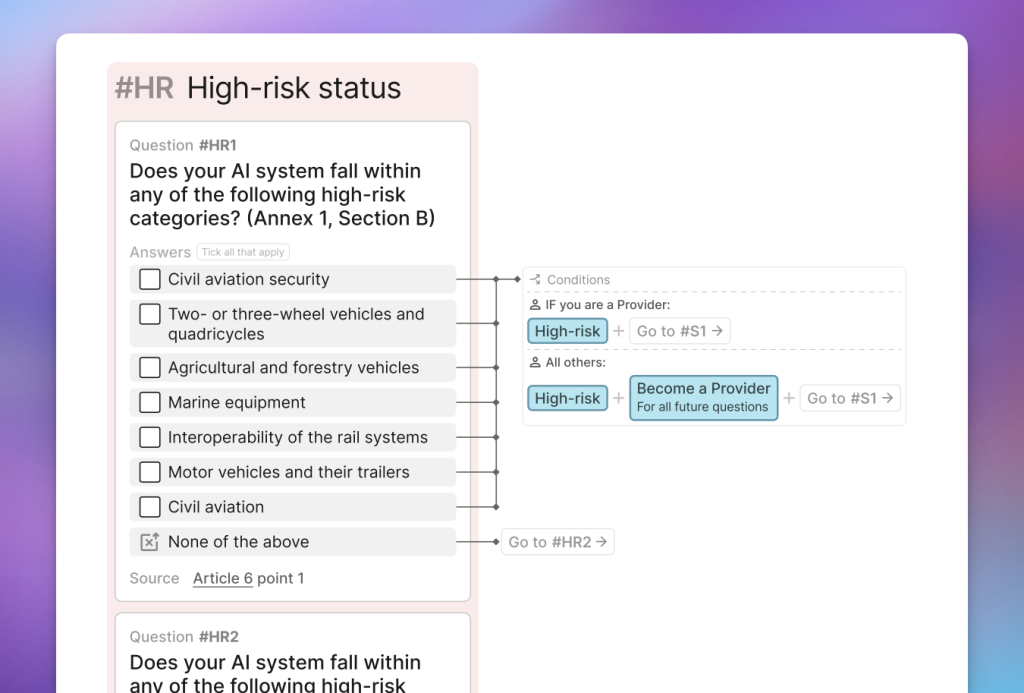

View the Compliance Checker as a PDF Flowchart

See a visual overview of the full form logic, and save it for offline access.

Feedback – We are working to improve this tool. Please send feedback to Taylor Jones at taylor@futureoflife.org

View the official text, or browse it online using our AI Act Explorer. The text used in this tool is the ‘Artificial Intelligence Act (Regulation (EU) 2024/1689), Official Journal version of 13 June 2024’. Interinstitutional File: 2021/0106(COD)

Changelog

3 July 2025

- Distributor and Importer obligations are now only shown if the user’s AI system is high-risk.

- Provided a full description of obligations for Providers of high-risk AI systems.

- Updated the description of obligations for Deployers to more accurately reflect the legal text.

25 April 2025

- Added a question and obligations for ‘Providers of General Purpose AI Models with Systemic Risk’.

16 April 2025

- Previously, Authorised Representatives were treated as Providers. Now they have distinct form logic, and only receive Authorised Representative obligations.

- Product Manufacturers now receive questions related to scope before assessing whether their system is high-risk.

- Added specific obligations for Product Manufacturers.

- Added a note to inform users that they may be considered an ‘operator’ according to Article 3, and therefore have corresponding obligations.

- Clarified the language around transparency obligations.

- Clarified the language around scope for Importers.

- Removed a redundant scope option.

- Merged the ‘General Purpose AI models’ question into the ‘Scope’ question to streamline the form for providers.

15 April 2025

- Added ‘AI Literacy’ obligations for providers and deployers.

- Added a ‘Fundamental rights impact assessment’ question and obligations for deployers of high-risk AI systems.

- Modified language around ‘General Purpose AI models’ to ensure there was no confusion between ‘models’ and ‘systems’.

- Clarified the language around prohibited AI systems, particularly around emotion recognition systems.

- Clarified the language around exemptions for ‘personal, non-professional activities’ and for ‘Public authorities or international organisations in third countries’.

1 April 2025

- Performed a significant refactor of the form ordering to prevent bugs occurring in some edge cases. In particular, moved the ‘high risk’ questions much earlier in the form, so that it can be determined sooner whether certain entities ‘become a provider’ according to Articles 22 and 25.

26 March 2024

- Fixed a bug which prevented Deployers from receiving their obligations under Article 29.

- Removed the following notice in the ‘High-risk Obligations’ result: “An additional exclusion for systems that are ‘purely accessory in respect of the relevant action or decision to be taken’ will be defined no later than one year after the AI Act is introduced.” This exclusion is no longer present in Article 7 of the final draft.

11 March 2024

- Updated the Compliance Checker to reflect the ‘Final draft’ of the AI Act.

Data Privacy: In order to email your results to you we are required to store your inputs from this form, including your email address. If you do not use the email function, none of your data will be stored. We do not store any other data that could be used to identify you or your device. If you wish to remain anonymous, please use an email address that does not reveal your identity. We do not share any of your data with any other parties. If you would like your data to be deleted from our servers, please contact taylor@futureoflife.org

Acknowledgements:

The logic for this form was stress-tested by Tomas Bueno Momcilovic, Scientific Researcher at Fortiss. Japanese translation was provided by Citadel AI.

Frequently Asked Questions

Who developed this tool, and why?

This tool was developed by the team at the Future of Life Institute. We are in no way affiliated with the European Union. We developed this tool voluntarily to aid the effective implementation of the AI Act, because we believe the AI Act supports our mission to ensure that AI technology remains beneficial for life and avoids extreme large-scale risks.

Can I integrate this tool in my own website?

Our tool is built in a way that makes it difficult to support on other sites, and we do not have an API available. Therefore, in almost all cases, we suggest that you make this tool available to users of your website by either:

A) Linking to this webpage – you are welcome to use these mockup images to feature the tool on your site.

B) Embedding the full-page version of this tool within your site as an iframe. Please note that iframes can be difficult to use on mobile devices, and will add new cookies and scripts to your users’ devices. Therefore this method is not recommended.

When does the EU AI Act come into effect?

The EU AI Act entered into force on 1 August 2024. An implementation period of two to three years now follows, during which different parts of the Act become applicable on different dates. Our implementation timeline provides an overview of all key dates relating to the Act’s implementation.

During the implementation period, the European standards bodies are expected to develop standards for the AI Act.

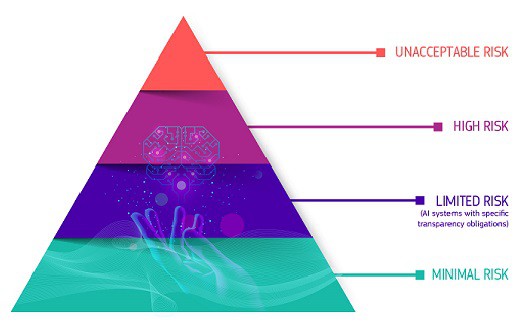

What are the categories of risk defined by the EU AI Act?

The Act’s regulatory framework defines four levels of risk for AI systems: unacceptable, high, limited, and minimal or no risk. Systems posing unacceptable risks, such as those that threaten people’s safety, livelihoods, and rights – from social scoring by governments to toys using voice assistance that encourages dangerous behaviour – will be prohibited. High-risk systems, such as those used in critical infrastructure or law enforcement, will face strict requirements, including around risk assessment, data quality, documentation, transparency, human oversight, and accuracy. Systems posing limited risks, like chatbots, must adhere to transparency obligations so that users know they are not interacting with humans. Minimal-risk systems, like games and spam filters, can be used freely.

What share of AI systems will fall into the high-risk category?

It is uncertain what share of AI systems will be in the high-risk category, as both the field of AI and the law are still evolving. The European Commission estimated in an impact study that only 5-15% of applications would be subject to stricter rules. A study by appliedAI of 106 enterprise AI systems found that 18% were high-risk, 42% low-risk, and 40% unclear if high or low risk. The systems with unclear risk classification in this study were mainly within the areas of critical infrastructure, employment, law enforcement, and product safety. In a related survey of 113 EU AI startups, 33% of the startups surveyed believed that their AI systems would be classified as high-risk, compared to the 5-15% estimated by the European Commission.

What are the penalties under the EU AI Act?

Based on the European Parliament’s adopted position, using prohibited AI practices outlined in Article 5 can result in fines of up to €40 million or 7% of worldwide annual turnover, whichever is higher. Not complying with the data governance requirements (under Article 10) or the requirements for transparency and provision of information (under Article 13) can lead to fines of up to €20 million or 4% of worldwide turnover, if that is higher. Non-compliance with any other requirements or obligations can result in fines of up to €10 million or 2% of worldwide turnover, again whichever is higher. Fines are therefore tiered by the provision violated, with prohibited practices carrying the highest maximum penalties.
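The “whichever is higher” rule is simple arithmetic: the maximum fine is the larger of a fixed amount and a percentage of worldwide annual turnover. The Python sketch below illustrates it using only the figures quoted in the paragraph above. The `Violation` tiers and the `max_fine_eur` function are hypothetical names chosen for illustration, and the sketch computes only the upper limit of a fine, not the amount an authority would actually impose.

```python
from enum import Enum


class Violation(Enum):
    """Hypothetical labels for the three fine tiers described above."""
    PROHIBITED_PRACTICE = "Article 5"
    DATA_OR_TRANSPARENCY = "Articles 10 and 13"
    OTHER = "any other requirement or obligation"


# (fixed cap in EUR, percentage of worldwide annual turnover), as quoted above
FINE_TIERS = {
    Violation.PROHIBITED_PRACTICE: (40_000_000, 7),
    Violation.DATA_OR_TRANSPARENCY: (20_000_000, 4),
    Violation.OTHER: (10_000_000, 2),
}


def max_fine_eur(violation: Violation, worldwide_turnover_eur: int) -> float:
    """Return the upper limit of the fine: whichever of the two caps is higher."""
    fixed_cap, percent = FINE_TIERS[violation]
    turnover_cap = worldwide_turnover_eur * percent / 100
    return max(fixed_cap, turnover_cap)


# EUR 1 billion turnover: 7% (EUR 70M) exceeds the EUR 40M fixed cap.
print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 1_000_000_000))  # 70000000.0
# EUR 100 million turnover: 7% is only EUR 7M, so the EUR 40M fixed cap applies.
print(max_fine_eur(Violation.PROHIBITED_PRACTICE, 100_000_000))  # 40000000
```

In other words, the percentage-based cap only matters for organisations whose turnover is large enough for that percentage to exceed the fixed amount.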

What measures does the EU AI Act implement for SMEs specifically?

The EU AI Act aims to provide support for SMEs and startups as specified in Article 55. Specific measures include granting priority access for SMEs and EU-based startups to regulatory sandboxes if eligibility criteria are met. Additionally, tailored awareness raising and digital skills development activities will be organised to address the needs of smaller organisations. Moreover, dedicated communication channels will be set up to offer guidance and respond to queries from SMEs and startups. Participation of SMEs and other stakeholders in the standards development process will also be encouraged. To reduce the financial burden of compliance, conformity assessment fees will be lowered for SMEs and startups based on factors like development stage, size, and market demand. The Commission will regularly review certification and compliance costs for SMEs/startups (with input from transparent consultations) and work with Member States to reduce these costs where possible.

Can I voluntarily comply with the EU AI Act even if my system is not in scope?

Yes! The Commission, the AI Office, and/or Member States will encourage voluntary codes of conduct for requirements under Title III, Chapter 2 (e.g. risk management, data governance, and human oversight) for AI systems not deemed to be high-risk. These codes of conduct will provide technical solutions for how an AI system can meet the requirements, according to the system’s intended purpose. Other objectives like environmental sustainability, accessibility, stakeholder participation, and diversity of development teams will be considered. Small businesses and startups will be taken into account when encouraging codes of conduct. See Article 69 on Codes of Conduct for Voluntary Application of Specific Requirements.

Let us know what resources you need

We'd welcome two minutes of your feedback on the AI Act website, so that we can build the resources most helpful to you. We have produced many of the resources on our website in direct response to user feedback, sometimes within just 1-2 weeks.

This site exists with the aim of providing helpful, objective information about developments related to the EU AI Act. It is used by more than 150k users every month. Thank you in advance for your time and effort – hopefully we can pay it back in tailored, high-quality information addressing your needs.